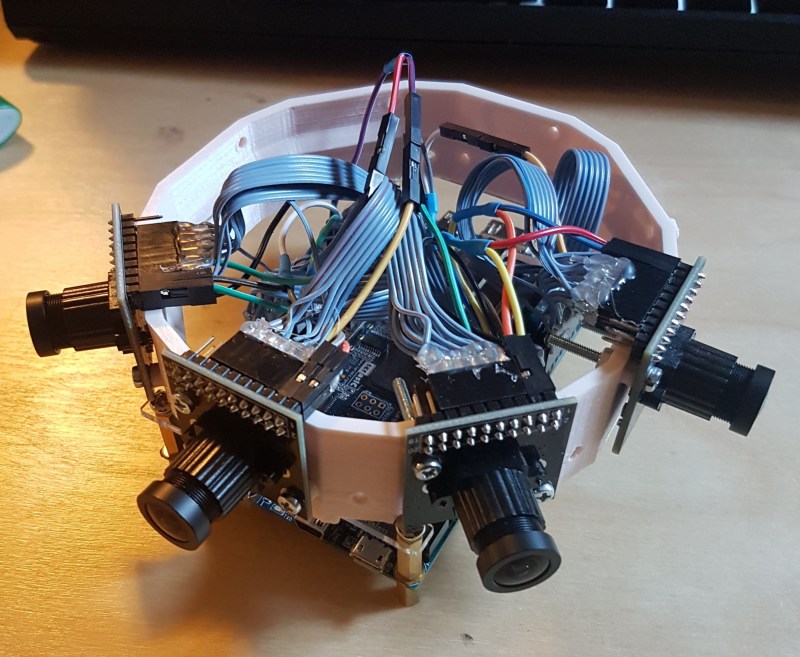

VR is in vogue, but getting on board requires a steep upfront cost. Hackaday.io user [Colin Pate] felt that $800 was a bit much for even the cheapest commercial 360-degree 3D camera, so he thought: ‘why not make my own for half that price?’

[Pate] knew he’d need a lot of bandwidth and many GPIO ports for the camera array, so he sought out the Altera Cyclone V SoC FPGA and a Terasic DE10-Nano development board to host it. At present, he has four Uctronics OV5642 cameras on his rig, chosen for their extensive documentation and support. The camera mount itself is a 3D-printed octagon sized for eight OV5642s, enough to capture a full 360-degree photo.
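To put “a lot of bandwidth” in numbers, here is a back-of-the-envelope sketch of the uncompressed pixel rate an array like this produces. The OV5642’s full resolution is 2592 × 1944 (5 MP); the 2 bytes per pixel (a 16-bit format such as YUV422) and the 15 fps frame rate are illustrative assumptions, not figures from the build log.

```python
def raw_data_rate_mb_per_s(n_cameras: int, width: int, height: int,
                           bytes_per_pixel: float, fps: float) -> float:
    """Uncompressed pixel data from all cameras together, in MB/s (1 MB = 1e6 bytes)."""
    return n_cameras * width * height * bytes_per_pixel * fps / 1e6

# Assumed values: 2 bytes/pixel and 15 fps are hypothetical, for illustration only.
for n in (4, 8):
    rate = raw_data_rate_mb_per_s(n, 2592, 1944, 2, 15)
    print(f"{n} cameras: {rate:.0f} MB/s")
```

Under those assumptions, four cameras already push roughly 0.6 GB/s of raw pixels and eight push about 1.2 GB/s, which is the sort of parallel, high-throughput capture an FPGA handles more comfortably than a typical microcontroller.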

Next: producing an image!

[Pate] is achieving the stereoscopic effect by mounting each camera 64 mm apart (the average distance between a person’s eyes) and blending the overlapping fields of view of each camera.
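For a rough feel for the geometry, the sketch below assumes (our assumption; the write-up doesn’t say) that the 64 mm is the spacing between adjacent camera centers on the octagon. It uses the chord formula s = 2R·sin(π/n) to get the ring radius, plus the minimum horizontal field of view per camera: 360°/n just to cover the horizon, and twice that under the simple assumption that every direction must be seen by two cameras to get stereo.

```python
import math

def ring_geometry(spacing_mm: float = 64.0, n_cameras: int = 8):
    """Geometry of a regular ring of cameras (a sketch, not the builder's design files).

    Assumption: spacing_mm is the distance between ADJACENT camera centers.
    Returns (ring radius in mm, min HFOV for coverage, min HFOV for stereo), angles in degrees.
    """
    # Chord of a regular n-gon: s = 2 * R * sin(pi / n)  =>  R = s / (2 * sin(pi / n))
    radius_mm = spacing_mm / (2.0 * math.sin(math.pi / n_cameras))
    # Each camera must cover at least its 1/n share of the horizon...
    coverage_hfov_deg = 360.0 / n_cameras
    # ...and twice that if every direction must appear in two cameras for stereo.
    stereo_hfov_deg = 2.0 * coverage_hfov_deg
    return radius_mm, coverage_hfov_deg, stereo_hfov_deg

radius, cover, stereo = ring_geometry()
print(f"radius {radius:.1f} mm, HFOV >= {cover:.0f} deg (coverage), >= {stereo:.0f} deg (stereo)")
```

With eight cameras this gives a ring radius of about 84 mm and at least a 90° field of view per lens for stereo, before any margin for the blend region. That may be part of why finding the right lenses took a few tries.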

Unfortunately for him, it took until his third round of lenses to find a set that worked, not to mention some effort calibrating them and some programming finesse to make the images stitch together properly. So far, [Pate] has successfully captured a 200-degree stereoscopic image, and we’re excited to see the finished product!

There are ways to capture stereoscopic images with only one camera, but it requires a little smoke and mirrors, or in this case, two cleverly mounted cameras with fish-eye lenses.

“There are ways to capture stereoscopic images with only one camera, but it requires a little smoke and mirrors, or in this case, two cleverly mounted cameras with fish-eye lenses.”

??????

For certain exceptionally large values of “one camera” that include “two cleverly mounted cameras,” I guess…?

Yes, values of one up to one point nine recurring.

Presumably, they mean one camera pointing in a given direction.

You can do stereoscopic with one camera plus smoke and mirrors, minus the smoke.

http://www.steves-digicams.com/news/3D%20Mirrors.jpg

That’s a narrow FOV though, and not relevant here.

Plus using a DSLR and a rig like that is a bit big and cumbersome.

However, they now sell that same setup as a small attachment for cellphone cameras on the usual Chinese sites for around 5 to 10 bucks, although I’m not sure they use first-surface mirrors. And of course you won’t get the quality of a dedicated rig and a DSLR, but maybe you could get a 3D printer running, buy some decent first-surface mirrors, and make a copy that works better on action cams or cellphone cameras.

Also, maybe take every other frame at, say, 15 to 20 fps, and it stays 3D as long as you keep moving and don’t move too fast.
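The idea behind this trick is that, for a static scene, the stereo baseline between two frames is simply how far the camera moved between them: baseline = speed × (frame gap / fps). The sketch below solves for the sideways speed that produces an eye-like 64 mm baseline. `frame_gap` is a hypothetical parameter we added because “every other frame” could mean pairing adjacent frames or frames two apart; constant, purely lateral motion is assumed.

```python
def required_speed_m_per_s(baseline_m: float, fps: float, frame_gap: int = 1) -> float:
    """Sideways camera speed that separates two frames by baseline_m.

    Assumes a static scene and purely lateral motion at constant speed.
    frame_gap is how many frame intervals apart the two frames of a pair are.
    """
    dt = frame_gap / fps      # seconds between the paired frames
    return baseline_m / dt    # metres per second

for fps in (15, 20):
    for gap in (1, 2):
        v = required_speed_m_per_s(0.064, fps, gap)
        print(f"{fps} fps, gap {gap}: {v:.2f} m/s")
```

That works out to roughly 0.5 to 1.3 m/s, a brisk sideways sweep. Moving faster widens the baseline beyond eye spacing and adds motion blur, which fits the “don’t move too fast” caveat.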

Just move your camera back and forth really quickly.

Does anyone remember a guy who did that with the old Logitech QuickCam? He was able to process “shaky hand” clips from the cam into high-resolution images, with depth too, I think.

I got the impression it was militarily useful and suppressed, although a decade later Google was doing stuff almost as advanced, but not really exposing their methods. There were other academic papers on the topic that went dark or missing in the same timeframe.

It was on the web in late ’99 or earlier and disappeared soon after “9/11”. I hunt for it every so often.

Vaguely. I do know the technique well, though: it’s called Structure from Motion, and a shaky camera is a fine feed, since the main requirement is enough overlap between subsequent images to be able to do the math. It’s not suppressed or hidden; Google Scholar coughs up plenty: https://scholar.google.com/scholar?q=structure+from+motion&hl=en&as_sdt=0&as_vis=1&oi=scholart&sa=X&ved=0ahUKEwinuo7GlpnXAhUF_IMKHdlCAdgQgQMIJjAA
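Structure from Motion pipelines typically match features across frames, estimate the relative camera poses, triangulate 3D points, and refine everything with bundle adjustment. As a minimal sketch of just the triangulation step, assuming both camera projection matrices are already known, here is linear (DLT) triangulation in NumPy. The intrinsics `K`, the 64 mm camera offset, and the test point are made-up values for a synthetic check.

```python
import numpy as np

def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point seen in two views.

    P1, P2: 3x4 camera projection matrices.
    x1, x2: (u, v) pixel coordinates of the same point in each view.
    Returns the 3D point as a length-3 array.
    """
    u1, v1 = x1
    u2, v2 = x2
    # From u = (P[0] . X) / (P[2] . X): each view gives two equations A @ X = 0.
    A = np.array([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    # Least-squares solution: right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]  # back from homogeneous coordinates

# Synthetic check (hypothetical values): two pinhole cameras 64 mm apart along x.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.064], [0.0], [0.0]])])

X_true = np.array([0.1, -0.05, 2.0, 1.0])
h1 = P1 @ X_true
h2 = P2 @ X_true
x1 = h1[:2] / h1[2]
x2 = h2[:2] / h2[2]
print(triangulate_dlt(P1, P2, x1, x2))  # approximately [0.1, -0.05, 2.0]
```

Real footage adds noise and unknown poses, so in practice this linear estimate is usually only a starting point for nonlinear refinement.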

Shaky cams normally mean blurry images. Now that optical image stabilization has finally become a thing in small cameras, that kind of approach might work, but certainly not with webcams, which never have OIS (nor with RasPi cams, which also lack that feature). And even with processing, a blur tends to remain a blur. I’ve tried a few software packages that made wild claims and then turned out not to be able to overcome really motion-blurred footage.

Mind you, even in shaky footage there will be an occasional clear image that you can use as a basis to try to get more data from the blurry ones, but with real limitations.
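One common way to find those occasional clear frames automatically (a swapped-in heuristic, not something the commenters specify) is to score each frame by the variance of its Laplacian. Blur suppresses fine detail, so blurrier frames score lower. Here is a minimal NumPy sketch; the random binary pattern and the crude five-pixel box blur are stand-in frames for illustration.

```python
import numpy as np

def laplacian_variance(gray: np.ndarray) -> float:
    """Sharpness score: variance of a 4-neighbour Laplacian (higher = sharper)."""
    g = gray.astype(np.float64)
    # Discrete Laplacian on interior pixels: up + down + left + right - 4 * center.
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())

def sharpest_frames(frames, k=1):
    """Indices of the k sharpest frames, sharpest first."""
    scores = [laplacian_variance(f) for f in frames]
    return sorted(range(len(frames)), key=lambda i: scores[i], reverse=True)[:k]

# Stand-in frames: a random binary pattern and a horizontally box-blurred copy.
rng = np.random.default_rng(0)
sharp = (rng.random((64, 64)) > 0.5) * 255.0
blurred = sum(np.roll(sharp, s, axis=1) for s in range(5)) / 5.0
print(sharpest_frames([blurred, sharp, blurred], k=1))  # [1]
```

A sharpness score only ranks frames; as noted above, it can’t recover detail that motion blur has already destroyed.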

Anyway, it was done with the Kinect too, and people also did it with cellphones.

Talking of which, did you hear that MS has decided to stop manufacturing the Kinect? It’s odd how not just MS but everybody dropped motion-capture gaming just as VR/AR is taking off, which you would think MS could tie into their old depth-capture work, but nope.

This actually reminds me of the new anti-ghosting technique in monitors, where they blink the LED backlight at twice the refresh rate or more so your eyes don’t see the ghosting.

Sounds clever and effective (and a variation of the old film camera butterfly shutter in a way), although I can’t say I tested it myself for effectiveness.

A couple more cameras for the top and bottom hemispheres, plus an orbital motion combined with accelerometer data, and you could get much better object depth mapping and add some degree of positional mapping (dependent on orbital width) and rotational mapping as well. It would be a lot more complicated, with much larger image files, as they would no longer just be rendered on a spherical surface but projected from an actual 3D point cloud. The hardware limitations would be the frame rate and effective shutter speed of the camera.
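To make the “spherical surface versus point cloud” distinction concrete, here is a sketch of mapping a 3D point onto an equirectangular panorama, one common storage format for 360-degree images (the article doesn’t say which format [Pate] uses). The axis convention (y up, +z straight ahead) is our assumption. Only the direction survives the projection, so two points at different distances along the same ray land on the same pixel, whereas a point cloud would keep that distance.

```python
import math

def point_to_equirect(x: float, y: float, z: float, width: int, height: int):
    """Pixel (u, v) of a 3D point on an equirectangular image.

    Viewer at the origin; y is up and +z is straight ahead (assumed convention).
    Longitude runs left to right across the width, latitude top to bottom.
    """
    lon = math.atan2(x, z)                  # -pi..pi, 0 = straight ahead
    lat = math.atan2(y, math.hypot(x, z))   # -pi/2..pi/2, positive = up
    u = (lon / (2.0 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return u, v

# Two points on the same ray, 1 m and 5 m away, map to the same pixel: depth is lost.
print(point_to_equirect(0.0, 0.0, 1.0, 4096, 2048))  # (2048.0, 1024.0)
print(point_to_equirect(0.0, 0.0, 5.0, 4096, 2048))  # (2048.0, 1024.0)
```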

Oh. This is a rabbit hole that will lead well beyond the $800 depth.

Never mind. Great job! Enjoy the satisfaction of building your own $800 analog.

Thanks a lot for the write-up! I’m currently working on redoing the stitching based on optical flow, which I recently learned about from Facebook’s open-source Surround 360 camera. It’s a lot more complicated, but it should provide a much more seamless 3D image than the basic feathering that I’m doing right now.
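For reference, “feathering” usually means a linear cross-fade across the region where two aligned images overlap. Here is a minimal NumPy sketch of that generic technique. It assumes the images are already aligned and share a known number of overlapping columns. It is not [Pate]’s actual code, and it does no optical-flow warping, so any misalignment in the overlap shows up as ghosting instead of being corrected.

```python
import numpy as np

def feather_blend(left: np.ndarray, right: np.ndarray, overlap: int) -> np.ndarray:
    """Join two images side by side, cross-fading linearly across `overlap` columns.

    left, right: arrays of shape (H, W) or (H, W, C) with matching H (and C).
    Assumes the last `overlap` columns of `left` show the same scene as the
    first `overlap` columns of `right` (i.e. the images are already aligned).
    """
    if overlap <= 0:
        return np.concatenate([left, right], axis=1)
    l = left.astype(np.float64)
    r = right.astype(np.float64)
    # Weight of the right image ramps from 0 to 1 across the overlap.
    w = np.linspace(0.0, 1.0, overlap)
    # Reshape so the weights broadcast over rows (and channels, if present).
    w = w.reshape((1, overlap) + (1,) * (l.ndim - 2))
    seam = (1.0 - w) * l[:, -overlap:] + w * r[:, :overlap]
    return np.concatenate([l[:, :-overlap], seam, r[:, overlap:]], axis=1)

left = np.full((2, 6), 100.0)
right = np.full((2, 6), 200.0)
print(feather_blend(left, right, 3)[0])  # 100s, then a 100 -> 150 -> 200 ramp, then 200s
```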

Out of curiosity, how are you handling the lens calibration and math? OpenCV?